Motivation

As most of us rely on JHOVE for file format validation, it is important to know how well JHOVE actually validates and, where shortcomings are found, to improve it. One way to do this is tool benchmarking, as I did for JPEG in 2016 and for TIFF earlier this year, and as Johan did for the WAVE format.

Our Digital Archive currently holds almost 50,000 GIF files, and we use JHOVE to validate them.

For this examination, I have a sample of more than 48,000 GIF files. 221 of these files are interesting in one of the following ways:

- Either JHOVE or one of the other tested tools flagged the file as invalid

- A self-built Java tool revealed that the file header was not correct

- A self-built Java tool revealed that the trailer was not correct

All other files were valid and not flagged as invalid by any of the tools, so I left them out of the statistics in this blog post.

Initially, I worked with only 1,000 files, which also allowed me to check whether anything was visibly wrong with each file and whether it could be opened with one of three viewers: Internet Explorer, ImageMagick or Paint. This was not feasible for all 48,000+ files, so for those I checked whether a preview thumbnail of the image was shown in the folder. In addition, I checked renderability for all 221 “interesting” files.

JHOVE and GIF

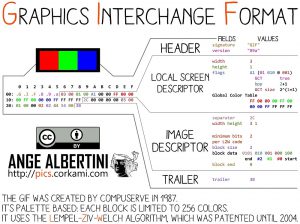

The Graphics Interchange Format (GIF) does not seem to be that widespread in digital preservation. JHOVE has a module that validates GIF files. It is among the smallest of all JHOVE modules, but this might simply be due to the simplicity of the GIF format.

| Module | Files in module | Lines of code | JHOVE possible error responses |
|---|---|---|---|
| GIF | 2 | 80 | 10 |
| TIFF | 61 | 14,468 | 68 |
| | 61 | 10,548 | 152 |
| JPEG | 9 | 948 | 13 |

GIF header & GIF trailer

Depending on the GIF version, a GIF file always has to start with one of the following two ASCII strings:

- GIF87a (released 1987)

- GIF89a (released 1989)
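A header check in the spirit of the self-built Java tool mentioned above can be sketched as follows. This is a minimal illustration, not the tool used for this examination: the class and method names are hypothetical, and it assumes Java 11+ (for `InputStream.readNBytes(int)`). It only compares the first six bytes against the two signatures, so it says nothing about the rest of the file.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;

// Hypothetical helper, not the tool used in this post: checks only the
// 6-byte GIF signature plus version at the very start of a file.
final class GifHeaderCheck {

    private static final byte[] GIF87A = "GIF87a".getBytes(StandardCharsets.US_ASCII);
    private static final byte[] GIF89A = "GIF89a".getBytes(StandardCharsets.US_ASCII);

    /** True if the data starts with "GIF87a" or "GIF89a". */
    static boolean hasValidHeader(byte[] data) {
        if (data.length < 6) {
            return false; // too short to contain a complete header
        }
        byte[] header = Arrays.copyOf(data, 6);
        return Arrays.equals(header, GIF87A) || Arrays.equals(header, GIF89A);
    }

    /** Reads at most the first six bytes of the file (readNBytes(int) needs Java 11+). */
    static boolean hasValidHeader(Path file) throws IOException {
        try (InputStream in = Files.newInputStream(file)) {
            return hasValidHeader(in.readNBytes(6));
        }
    }

    public static void main(String[] args) throws IOException {
        for (String name : args) {
            boolean ok = hasValidHeader(Paths.get(name));
            System.out.println(name + ": header " + (ok ? "ok" : "NOT ok"));
        }
    }
}
```

With Java 11+, this can be run directly as `java GifHeaderCheck.java file1.gif file2.gif`.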

To conform to its file format specification, a GIF file always has to end with the byte 0x3B (hex “3B”, the ASCII character “;”). This is what a valid trailer looks like:

![]()

An invalid trailer might look like this:

![]()

A common error is that some of the sample files were not fully uploaded or downloaded, so something is missing at the end. With complex file formats like PDF, this usually leads to files that can no longer be rendered. With the simpler GIF format, the files can still be opened, but often a chunk of the image is visibly missing.
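A trailer check can be sketched in the same spirit. Again, the class name is hypothetical and this is not the author's tool. Testing only the last byte is a heuristic: a file with padding after the trailer fails it, and a truncated file whose last surviving byte happens to be 0x3B passes it, which is one reason the visual check is still needed.

```java
import java.io.IOException;
import java.io.RandomAccessFile;

// Hypothetical helper, not the tool used in this post: checks whether the
// last byte of a file is the GIF trailer 0x3B (';').
final class GifTrailerCheck {

    static final int GIF_TRAILER = 0x3B;

    static boolean hasValidTrailer(byte[] data) {
        // Mask with 0xFF because Java bytes are signed.
        return data.length > 0 && (data[data.length - 1] & 0xFF) == GIF_TRAILER;
    }

    /** Reads only the last byte, so large files need not be loaded into memory. */
    static boolean hasValidTrailer(String path) throws IOException {
        try (RandomAccessFile file = new RandomAccessFile(path, "r")) {
            long length = file.length();
            if (length == 0) {
                return false;
            }
            file.seek(length - 1);
            return file.read() == GIF_TRAILER; // read() returns 0..255, or -1 at EOF
        }
    }

    public static void main(String[] args) throws IOException {
        for (String name : args) {
            System.out.println(name + ": trailer " + (hasValidTrailer(name) ? "ok" : "NOT ok"));
        }
    }
}
```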

Examination – Findings of the four tools

For my tests, I used GIF files from different sources, e.g. OPF govdocs, files from co-workers and files from our own archive. Validity was checked with four tools:

- JHOVE

- ImageMagick (version 7.0.3-Q16)

- NLNZ Metadata Extractor (version 3.6GA)

- Bad Peggy

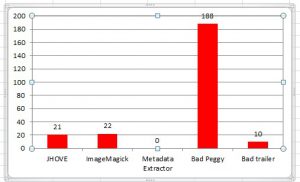

Of these four tools, only JHOVE and ImageMagick are really meant for GIF validation.

The Metadata Extractor is designed for metadata extraction rather than validation, but as a side effect it throws errors if a file is too broken for its metadata to be extracted properly.

Although Bad Peggy is mainly intended for JPEG validation, it also understands GIF (as well as PNG and BMP) images, as far as Java's ImageIO understands them. Its GIF validation is not as extensive as its JPEG validation and is rather rudimentary, but it was worth a try.
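For illustration, the following sketch shows the kind of check a tool built on Java's ImageIO can perform: ask ImageIO to decode the bytes and report any exception. It is not Bad Peggy's actual code, and the class name is hypothetical. Because the decoder may still return an image when data is missing at the end, a check like this can let files with a bad trailer or a missing chunk pass, which would be consistent with (though not proof of) the many misses reported in Scenario 1.

```java
import java.awt.image.BufferedImage;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import javax.imageio.ImageIO;

// Hypothetical ImageIO-based decode check; not Bad Peggy's implementation.
final class ImageIoGifCheck {

    /** Returns null if ImageIO decoded the data, otherwise a short error description. */
    static String decodeError(byte[] data) {
        try {
            BufferedImage image = ImageIO.read(new ByteArrayInputStream(data));
            if (image == null) {
                return "no ImageIO reader recognised the data";
            }
            return null;
        } catch (IOException | RuntimeException e) {
            // Decoding problems surface as IIOException (a subclass of IOException)
            // or, for some malformed input, as runtime exceptions.
            return e.getClass().getSimpleName() + ": " + e.getMessage();
        }
    }

    public static void main(String[] args) throws IOException {
        for (String name : args) {
            String error = decodeError(Files.readAllBytes(Paths.get(name)));
            System.out.println(name + ": " + (error == null ? "decoded" : error));
        }
    }
}
```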

In this blog post, a file in the sample is considered valid if there is nothing visibly wrong with it and its header and trailer are correct. I am aware that such a file could still violate its file format specification in other ways that are more difficult for me to detect, at least for now.

All files that either

- can’t be opened

- are visibly missing a chunk

- have an incorrect trailer

- have an incorrect header

are considered invalid in this blog post.

So, how do I determine the quality of a validation tool? It depends on how I use it, of course.

Scenario 1: using a tool as the only validation tool in the preservation system

In this case, I do not want the tool to miss any invalid file. There should be no false negatives (i.e. invalid files that the tool does not detect).

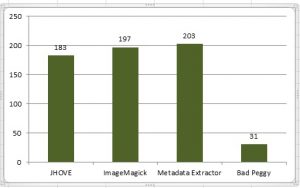

Well, in this case: hands off Bad Peggy! It misses 188 files, which is a very poor result considering that the pool of “interesting” files in this sample is 221. As I discussed in an earlier blog post, Bad Peggy is best suited for JPEG validation; GIF validation does not seem to be the tool’s strength. Given that the name is “Bad Peggy” rather than “Bad Giffy”, this is not surprising.

I have included the “bad trailer” category in this graphic because I wanted to test whether all files that cannot be rendered also lack a valid trailer. Most of them do. However, 10 of them have a perfectly fine trailer but either cannot be rendered at all or are visibly missing a chunk of the image when displayed.

JHOVE and ImageMagick are evenly matched here. In fact, they miss problems in almost the same files within the corpus. There are two files that JHOVE catches and ImageMagick misses (testfiles 30350.gif and 30400.gif) and one file that ImageMagick catches, but JHOVE misses (testfile 16533.gif). As this examination should serve to improve JHOVE’s validation skills, I have created a GitHub issue to document this case.
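Finding the files that one tool catches and the other misses is a simple set difference. In the sketch below, the three file names come from this post; `shared-miss.gif` is a hypothetical placeholder for the files both tools miss, and the class name is also hypothetical. Java 9+ is assumed for `Set.of`.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper: which files does one tool miss that the other catches?
final class ToolDisagreement {

    /** Files in missedByA that are not in missedByB (sorted for readable output). */
    static Set<String> missedOnlyBy(Set<String> missedByA, Set<String> missedByB) {
        Set<String> result = new TreeSet<>(missedByA);
        result.removeAll(missedByB);
        return result;
    }

    public static void main(String[] args) {
        // File names from this post; "shared-miss.gif" is a hypothetical
        // placeholder for the files that both tools miss.
        Set<String> missedByJhove = Set.of("16533.gif", "shared-miss.gif");
        Set<String> missedByImageMagick = Set.of("30350.gif", "30400.gif", "shared-miss.gif");

        System.out.println("Missed only by JHOVE: " + missedOnlyBy(missedByJhove, missedByImageMagick));
        System.out.println("Missed only by ImageMagick: " + missedOnlyBy(missedByImageMagick, missedByJhove));
    }
}
```

Running `main` prints `Missed only by JHOVE: [16533.gif]` and `Missed only by ImageMagick: [30350.gif, 30400.gif]`.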

Scenario 2: limited staff

In this scenario, false positives (false alarms), i.e. files that are (or might be) perfectly valid but are flagged as invalid by a tool, are what I dislike most, as they cause me unnecessary work.

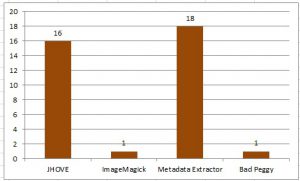

Well, JHOVE is not my favourite tool then, and neither is the Metadata Extractor. ImageMagick, meanwhile, raises a false alarm only once.

Scenario 3: I have the heart of a scientist and want the tools to just work and deliver correct findings

Both false negatives and false positives are unwanted in this scenario; I just want as many correct findings as possible.
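All three scenarios boil down to counting the four outcomes of a confusion matrix, treating “flagged as invalid” as a positive. The sketch below assumes two hypothetical inputs: a ground-truth map built with the validity criteria defined above, and a map of one tool's verdicts. Class and field names are illustrative only.

```java
import java.util.Map;

// Hypothetical helper: compares one tool's verdicts against a ground truth.
// "Positive" means "flagged as invalid".
final class ToolScore {

    int truePositives;   // invalid and flagged
    int falseNegatives;  // invalid but not flagged (what scenario 1 must avoid)
    int falsePositives;  // valid but flagged, i.e. a false alarm (scenario 2)
    int trueNegatives;   // valid and not flagged

    /**
     * @param groundTruthInvalid file name -> true if the file is invalid under this post's criteria
     * @param flaggedByTool      file name -> true if the tool reported the file as invalid;
     *                           files missing from this map count as not flagged
     */
    static ToolScore score(Map<String, Boolean> groundTruthInvalid, Map<String, Boolean> flaggedByTool) {
        ToolScore s = new ToolScore();
        for (Map.Entry<String, Boolean> entry : groundTruthInvalid.entrySet()) {
            boolean invalid = entry.getValue();
            boolean flagged = flaggedByTool.getOrDefault(entry.getKey(), false);
            if (invalid && flagged) {
                s.truePositives++;
            } else if (invalid) {
                s.falseNegatives++;
            } else if (flagged) {
                s.falsePositives++;
            } else {
                s.trueNegatives++;
            }
        }
        return s;
    }

    int correctFindings() {
        return truePositives + trueNegatives;
    }

    /** Scenario 3 weighs both kinds of error equally. */
    int errors() {
        return falseNegatives + falsePositives;
    }
}
```

Scenario 1 looks only at `falseNegatives`, Scenario 2 only at `falsePositives`, and Scenario 3 at `correctFindings()` versus `errors()`.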

Interestingly, the Metadata Extractor clearly wins here. With no false negatives at all, its 18 false positives do little harm to the tool's overall result; the only catch is that it is not really meant for validation.

ImageMagick still does a lot better than JHOVE, with only 1 false positive compared to JHOVE’s 16 false positives.

Conclusion

JHOVE does not do too badly, but it does not win the contest (yet), either. Of course, it is possible that the 16 presumably false positive files do violate the file format specification in some way: a way that affects neither the header, the trailer nor the renderability in contemporary viewers, but that might cause problems in the near future and pose a risk to long-term availability.

My next step will be to build GIF files from scratch, deliberately break their structure and then test whether JHOVE detects the errors. This test suite could then serve as a ground-truth set and might be added to GitHub to enrich the regression testing of future JHOVE versions.
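As a possible starting point for such a test suite, the sketch below writes a minimal 1x1 GIF89a file (35 bytes: header, logical screen descriptor, two-colour table, one image, trailer) plus a few deliberately broken variants. The class name, file names and choice of corruptions are hypothetical; a real ground-truth set would need many more structural errors, for example in the LZW data or the block sizes.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical generator for a tiny GIF test corpus.
final class GifCorpusBuilder {

    /** A minimal, valid 1x1 GIF89a image. */
    static byte[] minimalGif() {
        return new byte[] {
            'G', 'I', 'F', '8', '9', 'a',                                // header
            0x01, 0x00, 0x01, 0x00,                                      // logical screen 1 x 1 (little-endian)
            (byte) 0x80, 0x00, 0x00,                                     // global colour table (2 entries), bg index, aspect
            0x00, 0x00, 0x00, (byte) 0xFF, (byte) 0xFF, (byte) 0xFF,     // colour table: black, white
            0x2C, 0x00, 0x00, 0x00, 0x00, 0x01, 0x00, 0x01, 0x00, 0x00,  // image descriptor at (0,0), 1 x 1
            0x02, 0x02, 0x44, 0x01, 0x00,                                // LZW min code size 2, one 2-byte sub-block, terminator
            0x3B                                                         // trailer
        };
    }

    /** The valid file plus deliberately corrupted copies, keyed by file name. */
    static Map<String, byte[]> variants() {
        byte[] valid = minimalGif();
        Map<String, byte[]> files = new LinkedHashMap<>();
        files.put("valid.gif", valid);
        files.put("no-trailer.gif", Arrays.copyOf(valid, valid.length - 1));
        files.put("truncated-2-bytes.gif", Arrays.copyOf(valid, valid.length - 2));
        byte[] badHeader = valid.clone();
        badHeader[4] = '8'; // "GIF88a" is not a defined version
        files.put("bad-header.gif", badHeader);
        return files;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Paths.get(args.length > 0 ? args[0] : "gif-test-suite");
        Files.createDirectories(dir);
        for (Map.Entry<String, byte[]> entry : variants().entrySet()) {
            Files.write(dir.resolve(entry.getKey()), entry.getValue());
        }
    }
}
```

The resulting folder can then be fed to JHOVE and the other tools to see which corruption each of them reports.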

All findings can be found on Google Docs. Because some of the files cannot be published openly, I cannot publish the GIF files. However, I am willing to share interesting files by email, so just get in touch.

December 5, 2017 @ 12:29 pm CET

Another tool that’s worth checking out is giftext, which is part of the Giflib library:

http://giflib.sourceforge.net/giftext.html

I did a (very) quick check with a GIF of which I truncated the last 2 bytes, and this was picked up by giftext. (I expect the results will be similar to your ImageMagick results as IM uses Giflib for GIF.)

December 5, 2017 @ 12:33 pm CET

Sounds very interesting. So I guess I can embed that in my usual java stuff? Cool.

Thanks Johan!