Blog – Learning by doing in digital preservation

Libraries, archives and museums have been extremely successful in preserving centuries-old paper-based cultural and scientific heritage. How well are they doing with the growing and rapidly ageing digital heritage?

This question has been haunting us (the digital preservation community) for a while now, even though the digital era has only just begun. We are still unsure about so many things: Are we keeping the right information? Should we be more selective? What is the right preservation strategy: safeguarding the original containers and carriers, transferring the data to long-lasting media, emulating the hardware before it becomes obsolete? Which metadata should we record? Et cetera.

At iPRES 2012, keynote speaker Steve Knight set the tone by observing that “we are still asking the same questions as 10 years ago” and not making much progress. Paul Wheatley pointed to the duplication of effort in research projects and tool-building, and called it “a big fail”.

The conference proceedings do not reflect this discussion – they are a compilation of the papers that were accepted by the Scientific Program Committee – but you will find blogs and tweets that have captured the mood and voices of the participants. The concerns in the community are very real and deserve attention. In a series of blogs, I will attempt to address these concerns and to foster the informal conversation about the way forward.

Benchmarking

The concerns voiced at iPRES can be listed as follows: the gap between research and practice is too large; we need to move away from short-term project funding and towards long-term investments; we start lots of initiatives and most of them do the same thing, so there is too much duplication of effort and too much waste for such a niche area; we need to align ourselves and work together to achieve enough scale and to make the work more cost-effective. How do we know we are heading in the right direction? How can we measure progress? What are our benchmarks? How well do I perform in comparison with other digital archives and repositories? Et cetera. The many methods and tools developed over the past 10 years for the audit, assessment and certification of “trusted digital repositories” are evidence of such concerns. Just tally the occurrences of the words “risk”, “standard” and “certification” in recent conference proceedings on digital preservation: you will be overwhelmed! And the sheer number of surveys carried out to determine the state of preservation practices is astonishing. Everyone is talking about benchmarking and how to become a trustworthy repository, but benchmarking is neither a goal in itself nor a research question.

Let us take a step back and try to understand better what it is we are trying to do.

How did we do it in the paper era?

For centuries, we have assured the preservation of books, journals, newspapers, sheet music, maps and many more paper-based containers of information. To this day, we are able to provide access to most of these materials and the information therein is still mostly human-readable. This is a Herculean achievement that has been possible only thanks to a continuous and dedicated process of learning and improvement over centuries. This was neither a scientific process nor a standard-setting process. Organizations that have proven to be trusted keepers of the paper-based heritage have done so on the basis of grassroots practices that have matured over hundreds of years. Today, these good practices are woven into the fabric of the memory institutions. The setting of standards did not play a part in this evolutionary development. Preservation standards and regulations appeared only very recently and in most countries they have not (yet) been enforced. In the Netherlands, for example, the regulation of storage conditions in public archives was set as recently as 2002, but most public archives already adhered to the requirements before that. Research into the degradation and embrittlement of paper only started in the 1930s. It has made significant progress in the past decades and is still ongoing, but it is a background process in library preservation programs.

What are we doing differently now?

In digital preservation, most effort has been focused on research, modeling, risk assessment and standardization. This seems to indicate that we are proceeding in a different order: research is leading and is applied to the design and engineering of processes and systems. Research informs the standard-setting process, the results of which are then put into practice on the ground. The way in which the OAIS model has evolved from a reference framework (2002) into a recommended practice (2012) that underpins most audit and certification approaches to digital preservation illustrates this very well. In contrast to the bottom-up development of good practices in the paper era, we are now trying to standardize “best practices” that have been developed by research, in a top-down fashion, very much along the principles of scientific management developed by Frederick Winslow Taylor (1856–1915). In this order of things, there is very little room for feedback from practitioners on the ground and for learning by doing.

Learning by doing and the importance of failure

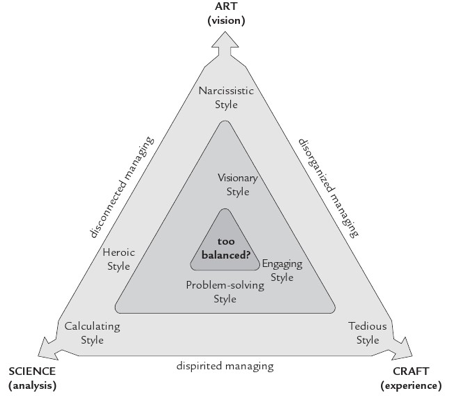

In quality management circles, it is widely accepted that the top-down approach does not work – not in the long run and not for complex and ICT-dense systems. Henry Mintzberg (1939–), who was critical of Taylor’s method, argued that effective managing requires some balanced combination of art (visioning), craft (venturing) and science (planning). This balance can only be achieved after years of experience and learning on the job.

Mintzberg’s managerial style triangle

Research cannot solve all the problems in advance. It was Joseph Moses Juran (1904–2008) who championed the importance of the learning process and who added the human dimension to quality management. Practitioners are part of the learning process: they have the skill sets and the work experience that can contribute to increased knowledge and improved workflows. Failure is also part of the learning process. Organizations should deal positively with failure because it leads to improvement. Workers’ participation in the continuous improvement of work processes was taken forward by Masaaki Imai (1930–) in his concept of “Kaizen”. Finally, William Edwards Deming (1900–1993) helped to popularize the concept of quality cycles, most commonly known as PDCA (Plan, Do, Check, Act). The notion that continuous improvement moves in repetitive cycles (also called iterations) was introduced in the software development industry some 20 years ago, with the RUP process, Extreme Programming and various agile software development frameworks.

It is clear that digital preservation-as-a-process, evolving in an ICT-dense context, would benefit greatly from adopting the quality management approach of continuous improvement. In this approach the practitioners are driving the learning process and research is facilitating. OPF’s philosophy is based on this approach. OPF Hackathons bring together practitioners and researchers and aim to move the practice of digital preservation forward through “learning by doing together”.

January 15, 2013 @ 1:37 pm CET

John, thanks for your comments.

Yes, I agree that for many organizations it would be highly beneficial to buy and deploy a commercial system or cloud offering, preferably something that comes closer to an out-of-the-box digital preservation solution than most Open Source solutions do.

Deploying Open Source solutions requires a stable and highly skilled back office of developers and system administrators, which is often difficult or close to impossible to sustain for smaller organizations in the humanities.

My next blog will be about how the community can benefit from Open Source Software, and about the organizational consequences.

January 11, 2013 @ 9:07 am CET

Bram, good article, thanks. I agree with the sentiment that we need to learn by doing whilst still not losing sight of the ongoing research and still being prepared to share results with similar organisations.

The move from research to production has many obstacles, however. One is the fear of leaping into this new area without a thorough understanding of this emerging domain. Another is moving forward with research-grade software without a large team of developers to back you up. Also, there is the fear that the infrastructure costs are too large up front and require specialist skills that an archiving function may not have.

A solution to these is the use of commercial systems rather than the open source / freeware that is relevant to the research domain. We provide this on your servers or in the cloud, as you prefer, whilst still encouraging the integration of research ideas from the good work that is done by researchers, including OPF. See http://www.digital-preservation.com for more.

January 9, 2013 @ 2:57 pm CET

Hi Courtney,

Thanks for your comment.

Fully agree: agile and iterative methods really help. They are actually Digital Age incarnations of Quality Circles and PDCA (Plan, Do, Check, Act). Some of the pending challenges that I will focus on in future blogs are issues around human resources, developing and sustaining competences, and what collaboration can do for the community. The development process is important and a good methodology is crucial for the quality of the output. The main condition for making digital preservation a success is to have the right staff employed in permanent roles and not as project-funded temporary employees. The former will enable deployment and maintenance of these finely developed technologies and practices; the latter will contribute to another perceived standstill in development, as indicated by Steve Knight. This is not so much a cultural shift in development practices as an organisational and HR challenge.

January 7, 2013 @ 6:28 pm CET

Thank you, Bram, for this thoughtful post. A culture shift is necessary in preserving institutions, towards more pragmatic, interactive methods of development. The open-source Archivematica* project employs the agile development method** in order to benefit from the community-wide experience of learning by doing and getting feedback early and often to make the suite of software better. One of the biggest challenges we face is helping preserving institutions understand their own role in finding digital preservation solutions. Fortunately, many libraries and archives have been at least administratively attached to collaborative efforts for a long time, so we hope to inspire them to participate in this kind of next-level, active, “getting-your-hands-dirty” type of collaboration.

*https://www.archivematica.org/

**http://en.wikipedia.org/wiki/Agile_software_development